eksctl is a simple CLI tool for creating clusters on Amazon EKS, the managed Kubernetes service on AWS, with EC2 or Fargate nodes. It invokes CloudFormation and generates a few stacks that create AWS components such as a VPC, a control plane, and worker nodes, depending on your case.

eksctl create cluster is usually the first command you run to spin up a Kubernetes cluster in AWS.

This is a rough guide to what this command does, followed by several patterns for using it. If you prefer to use Fargate to run your pods, you need to create a Fargate profile; see "Getting started with AWS Fargate using Amazon EKS" in the AWS documentation.

Here are the patterns for using this command when you prefer EC2 hosts for Kubernetes.

- Create a default set: a VPC, a control plane, and worker nodes

- Create a control plane and worker nodes in an existing VPC

- Create a VPC and a control plane, but without worker nodes

Why would we want to do these? Here are some possible reasons.

- An existing VPC and its subnets need to be integrated with the cluster, or the number of public and private subnets or the security group needs to change

- A cluster needs more node groups or managed node groups, or another node group needs to be added to an existing cluster

Create a default set, VPC, control plane and worker nodes

By default, eksctl create cluster does a lot of the tedious work required to spin up a Kubernetes cluster in AWS. It creates everything you need to start interacting with AWS EKS. This is a good abstraction for users, but it is better to understand what is happening behind the curtain.

Let’s delve further into the behavior of eksctl create cluster. This command creates 3 main components through CloudFormation.

No 1, VPC. A total of 8 subnets (3 public, 3 private, and 2 reserved) are created in one VPC with 192.168.0.0/16 (the default CIDR), and the initial node group is created in the public subnets with SSH access disabled. An internet gateway and a NAT gateway are also included in the stack. You can set the NAT gateway mode to Disable, Single (the default), or HighlyAvailable.
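As an illustration of the VPC settings described above, here is a minimal sketch of how the CIDR and NAT gateway mode might be set in an eksctl config file. The cluster name and region are taken from the command output later in this post; the rest is an assumption for illustration, not the configuration used in the original experiment.

```yaml
# Sketch only: a ClusterConfig that overrides the default VPC settings.
apiVersion: eksctl.io/v1alpha5
kind: ClusterConfig

metadata:
  name: eks-sample          # name used in the examples of this post
  region: ap-northeast-1    # region shown in the sample output

vpc:
  cidr: 192.168.0.0/16      # the default CIDR, written out explicitly
  nat:
    gateway: HighlyAvailable  # other values: Disable, Single (default)
```

Leaving out the vpc section entirely should give the defaults described above, so this block is only needed when you want something other than a single NAT gateway or the default CIDR.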

No 2, Kubernetes control plane (master nodes). A control plane is always created with an AWS EKS cluster, and the related processes such as the scheduler, controller-manager, and etcd run there automatically. AWS EKS is a managed service, so we don’t need to care about clustering the master nodes or about their availability and scalability, which is fabulous.

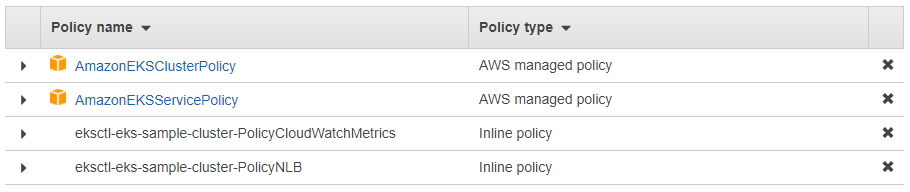

AmazonEKSClusterPolicy needs to be attached to the role eksClusterRole. A security group is also created for access to your cluster. In older versions of AWS EKS, you needed to create this security group manually.

Because the control plane is managed, if you run kubectl get componentstatuses on the created cluster, it returns <unknown> as the AGE for scheduler, controller-manager, and etcd-0. These are all managed by AWS EKS.

$ kubectl get componentstatuses

NAME AGE

scheduler <unknown>

controller-manager <unknown>

etcd-0 <unknown>

No 3, a node group. By default, the command creates 2 m5.large hosts in an initial node group, along with a security group for access to the nodes and an IAM role. A node group is the EKS term for what the Kubernetes world normally calls worker nodes. These hosts are placed in the provisioned public subnets by default unless you change the configuration.

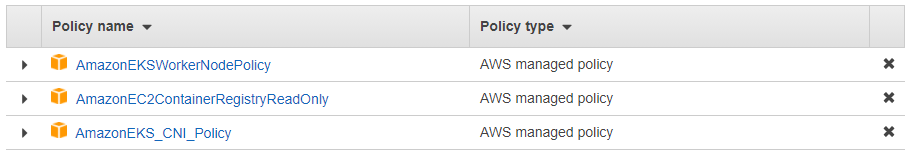

NodeInstanceRole is created and attached to the node group’s hosts. According to the AWS documentation, it must have at least 3 policies: AmazonEKSWorkerNodePolicy, AmazonEKS_CNI_Policy, and AmazonEC2ContainerRegistryReadOnly.

Here are the command and a sample YAML file for provisioning a VPC with default settings, a control plane, and 1 node group with 2 x t2.micro instances.
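The sample file itself is not reproduced in this text. As a rough sketch, cluster-eks-sample.yaml could look something like the following, assuming a single node group named ng-1 as in the description above. Treat the field values as assumptions for illustration rather than the exact file used here.

```yaml
# Sketch only: default VPC and control plane, plus one node group.
apiVersion: eksctl.io/v1alpha5
kind: ClusterConfig

metadata:
  name: eks-sample
  region: ap-northeast-1

# No vpc section, so eksctl provisions its default dedicated VPC.
nodeGroups:
  - name: ng-1
    instanceType: t2.micro   # instead of the default m5.large
    desiredCapacity: 2       # 2 hosts in the node group
```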

$ eksctl create cluster -f cluster-eks-sample.yaml

You can confirm the created cluster and the node group with the following commands.

$ eksctl get cluster

NAME REGION

eks-sample ap-northeast-1

$ eksctl get nodegroup --cluster eks-sample

CLUSTER NODEGROUP CREATED MIN SIZE MAX SIZE DESIRED CAPACITY INSTANCE TYPE IMAGE ID

eks-sample ng-1 2020-10-05T06:22:55Z 2 2 2 t2.micro ami-02e124a380df41614

eks-sample ng-2 2020-10-05T06:22:55Z 2 2 2 t2.micro ami-02e124a380df41614

Create a control plane and worker nodes with the existing VPC

You may want to configure a VPC differently from the default dedicated VPC that eksctl create cluster provisions, or you may need to use an existing VPC so that the cluster can share access to resources inside that VPC with other AWS resources.

If you specify the VPC information on the eksctl command line or include it in a YAML config file, you can use the existing VPC. The prerequisites for the subnets and the VPC are described on the eksctl site.

Here is an example of configuring the existing VPC in the equivalent YAML config file. In this case the command creates 1 node group with 2 hosts in the existing public subnets.
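The config file for this pattern is not reproduced in this text either. A minimal sketch of what cluster-eks-sample-vpc.yaml might contain is below. The VPC ID, subnet IDs, and availability zones are hypothetical placeholders and must be replaced with values from your own account.

```yaml
# Sketch only: reuse an existing VPC and its public subnets.
apiVersion: eksctl.io/v1alpha5
kind: ClusterConfig

metadata:
  name: eks-sample
  region: ap-northeast-1

vpc:
  id: "vpc-0123456789abcdef0"            # placeholder: your existing VPC
  subnets:
    public:
      ap-northeast-1a:
        id: "subnet-0aaaaaaaaaaaaaaaa"   # placeholder subnet ID
      ap-northeast-1c:
        id: "subnet-0cccccccccccccccc"   # placeholder subnet ID

nodeGroups:
  - name: ng-1
    instanceType: t2.micro
    desiredCapacity: 2   # 2 hosts, placed in the public subnets above
```

Because only public subnets are listed and private networking is not enabled for the node group, the hosts should land in those public subnets, which matches the behavior described above.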

$ eksctl create cluster -f cluster-eks-sample-vpc.yaml

Create a VPC and a control plane but without worker nodes

You can add an additional node group to an existing cluster, or create an empty cluster and then add your node groups to it.

In this experiment, I created an empty cluster named eks-sample and then configured 2 additional node groups in it.

Here is the command to create an empty cluster without any node group. Next, we add 2 node groups defined in a YAML file.
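The node group file used in the second step is not shown in this text. A sketch of what cluster-eks-sample-nodegroup.yaml could look like follows, using the node group names, instance type, and sizes that appear in the command output further down. Everything else is an assumption for illustration.

```yaml
# Sketch only: two node groups added to the existing eks-sample cluster.
apiVersion: eksctl.io/v1alpha5
kind: ClusterConfig

metadata:
  name: eks-sample          # must match the existing cluster
  region: ap-northeast-1

nodeGroups:
  - name: ng-1-workers
    instanceType: t2.micro
    desiredCapacity: 2
  - name: ng-2-builders
    instanceType: t2.micro
    desiredCapacity: 2
```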

$ eksctl create cluster --name eks-sample --without-nodegroup

$ eksctl get nodegroup --cluster eks-sample

No nodegroups found

$ eksctl create nodegroup --config-file=cluster-eks-sample-nodegroup.yaml

Now you can confirm the created cluster and the node groups with the following commands.

$ eksctl get nodegroup --cluster eks-sample

CLUSTER NODEGROUP CREATED MIN SIZE MAX SIZE DESIRED CAPACITY INSTANCE TYPE IMAGE ID

eks-sample ng-1-workers 2020-10-05T11:31:16Z 2 2 2 t2.micro ami-02e124a380df41614

eks-sample ng-2-builders 2020-10-05T11:31:16Z 2 2 2 t2.micro ami-02e124a380df41614

To wrap up, eksctl create cluster is a good starting point for working with AWS EKS clusters and nodes because it eliminates much of the tedious work around Kubernetes. In my understanding, it is important to know what this tool abstracts for both Kubernetes and AWS resources in order to avoid misconfigurations and mitigate security risks beforehand.