Sentiment analysis is used to interpret and evaluate customers' reactions to products and services from text such as reviews and feedback. It usually detects polarity (e.g. a positive or negative opinion) within each text. To build a sentiment analysis system, we need to understand what kind of sentiment analysis is required, how to process the given documents, paragraphs and sentences as text, and what model to create for the classification. I will explain broadly what to consider and show some code examples for sentiment analysis using Python libraries (NLTK and scikit-learn).

Sentiment analysis – Wikipedia

Sentiment analysis (also known as opinion mining or emotion AI) refers to the use of natural language processing, text analysis, computational linguistics, and biometrics to systematically identify, extract, quantify, and study affective states and subjective information.

Here's a list of the components to consider for such a system. I'll cover the first 4 items in the following sections. I'll use Anaconda3 and Jupyter Notebook for this experiment.

- What do we want to know? What sentiment analysis is needed? For example, polarity, emotions, aspect-based, intentions, and so on.

- What is the data and how to label it for the supervised model?

- How to process documents and sentences (feature engineering)?

- What supervised algorithm should be used and how to evaluate it?

- How to obtain new data and give an answer to users?

- How to retrieve periodic data and retrain the existing model?

What do we want to know? What sentiment analysis is needed?

Donald Trump's erratic style of diplomacy and administration has played out on Twitter. His tweets have affected market prices, foreign affairs and scandals, which is crazy. So let's assume that we want to evaluate whether a tweet by Donald Trump, or by anybody else, is positive or negative.

For example, if he or somebody else posts a few consecutive positive tweets, that might be a good indicator for the relevant area. It could be the financial markets, or the person's emotional state if the tweets are about personal matters.

Anyway, I'd like to pick the simplest type of sentiment analysis, polarity, which detects whether a tweet is positive or negative. It's simple and elementary: somebody posts a new tweet, and the supervised model automatically returns whether that tweet looks positive or negative.

What is the data and how to label it for the supervised model?

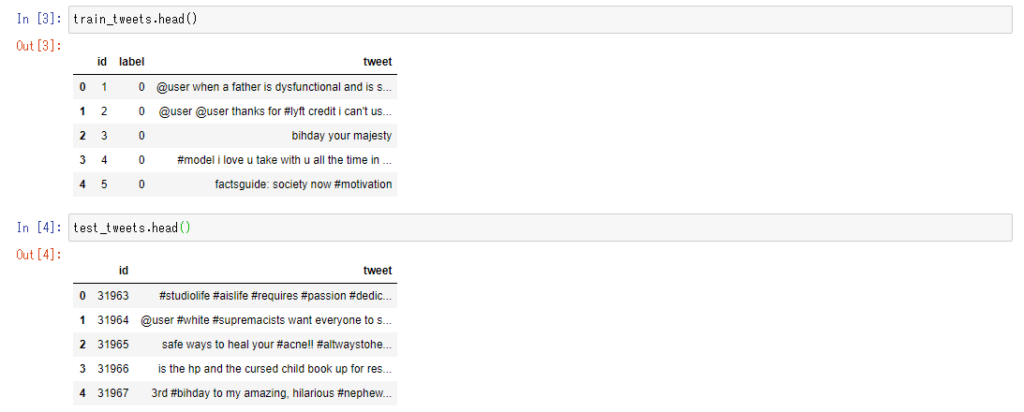

I found tweet data online (already divided into train and test sets) and uploaded it to GitHub as CSV files. It consists of the columns id, label and the raw tweet text as a string. There are 31962 tweets in the train data set. Note that in this data set label 0 is negative and label 1 is positive.

import pandas as pd

train_tweets = pd.read_csv('./train_tweets.csv')

test_tweets = pd.read_csv('./test_tweets.csv')

Let's visualize the label counts in a countplot with the seaborn library. We have only 2242 positive tweets against 29720 negative tweets. The data is heavily imbalanced, and we have to tackle this problem before training a model. You may wonder why this is a problem.

Let's say we have a machine learning model that classifies every tweet as negative (label 0). On this data it would be right for 29720 of 31962 tweets, about 93% accuracy, so it would seem to understand most of the sentiments in the tweet texts while never detecting a single positive tweet.

import seaborn as sns

sns.countplot(x='label', data=train_tweets)
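The accuracy trap can be checked with a few lines of plain Python. This is only a sketch built from the class counts quoted in this article; the "classifier" here is a hypothetical one that ignores its input and always answers 0.

```python
# Class counts reported for the training set in this article.
n_negative = 29720  # label 0
n_positive = 2242   # label 1
n_total = n_negative + n_positive

# A hypothetical "classifier" that always predicts 0 (negative).
true_labels = [0] * n_negative + [1] * n_positive
predictions = [0] * n_total

# Accuracy: share of all tweets labelled correctly.
correct = sum(t == p for t, p in zip(true_labels, predictions))
accuracy = correct / n_total

# Recall for label 1: share of the real positive tweets that were found.
found_positives = sum(
    1 for t, p in zip(true_labels, predictions) if t == 1 and p == 1
)
positive_recall = found_positives / n_positive

print(f"accuracy: {accuracy:.3f}")
print(f"recall for label 1: {positive_recall:.3f}")
```

The accuracy looks respectable while the recall for label 1 is zero, which is why accuracy alone is a poor guide on imbalanced data.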

To cope with the imbalanced data, I chose an oversampling method that generates synthetic samples for label 1. As a result, the number of label 1 samples increases to 29720, the same amount as the negative label. However, I apply this step only after preprocessing the tweets and converting them to features.

How to process documents and sentences (feature engineering)?

We've got raw tweets, so we need to preprocess and vectorize them for the algorithm to understand and learn patterns. Here are the 5 sub-sections I will follow for data processing and feature engineering.

- Removal of punctuation

- Removal of commonly used words (stopwords)

- Lemmatization

- Vectorization

- Oversampling (SMOTE)

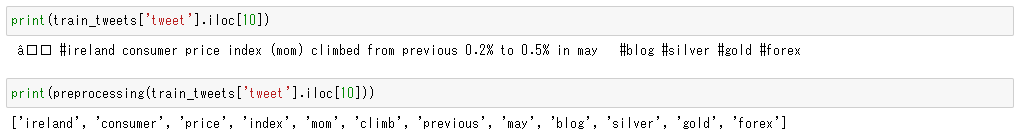

The first step is the removal of punctuation such as %, the hashtag # and @ from a tweet. Second, we remove frequently used words (stopwords), and last, we lemmatize the remaining words. As a result we get a clean list of lemmatized words without distractions such as punctuation and stopwords. Let's pack these 3 steps into 1 function that takes a raw tweet as its argument. We use the NLTK library for this purpose. Here's the sample code. I used TextBlob to remove punctuation.
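The three steps can be sketched roughly as follows. This is not the original function: it strips punctuation with `string.punctuation` instead of TextBlob, and the names `text_processing`, `make_nltk_preprocessor` and the `demo_*` stand-ins are assumptions made for illustration. The NLTK wiring sits in its own function because it downloads corpora on first use.

```python
import string


def text_processing(tweet, stop_words, lemmatize):
    """Return a list of cleaned, lemmatized tokens for one raw tweet."""
    # 1) Remove ASCII punctuation such as %, # and @.
    no_punctuation = tweet.translate(str.maketrans("", "", string.punctuation))
    # 2) Lowercase, split on whitespace, and drop stopwords.
    tokens = [t for t in no_punctuation.lower().split() if t not in stop_words]
    # 3) Lemmatize what is left.
    return [lemmatize(t) for t in tokens]


def make_nltk_preprocessor():
    """Wire text_processing to NLTK's stopword list and WordNet lemmatizer."""
    import nltk
    from nltk.corpus import stopwords
    from nltk.stem import WordNetLemmatizer

    # One-time corpus downloads; they need network access on first use.
    # Some NLTK versions also need "omw-1.4" for the WordNet lemmatizer.
    for resource in ("stopwords", "wordnet", "omw-1.4"):
        nltk.download(resource, quiet=True)

    stop_words = set(stopwords.words("english"))
    lemmatizer = WordNetLemmatizer()

    def preprocess(tweet):
        return text_processing(tweet, stop_words, lemmatizer.lemmatize)

    return preprocess


# Tiny hand-made stand-ins so the example runs without downloading anything.
demo_stop_words = {"in", "to", "the", "a", "of"}
demo_lemmas = {"tweets": "tweet", "markets": "market"}


def demo_lemmatize(word):
    return demo_lemmas.get(word, word)


sample = "@user 100% of the #tweets moved markets in a day!"
print(text_processing(sample, demo_stop_words, demo_lemmatize))
```

With NLTK installed, `preprocess = make_nltk_preprocessor()` would give a one-argument function that can be applied to each raw tweet.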

import nltk

Let's see how it works with a sample. Print the 10th item of the train data in the Python interpreter, then print the preprocessed 10th item. How does it look? We no longer see the punctuation % and #, or stopwords such as "in" and "to" from the original tweet. Unfamiliar characters have also been removed from the sentence. Sweet.

Let's move on to vectorizing the lemmatized tweets. Why vectorize? Because machine learning algorithms only understand numeric values, words, sentences and categorical data must be converted into numbers before training. There are 3 major ways to vectorize text: 1) frequency vectors, 2) one-hot encoding, 3) TF-IDF vectors. Let's use TfidfVectorizer from the scikit-learn library.
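To see how the three approaches differ, here is a small sketch on a made-up two-document corpus. One-hot encoding is approximated with `CountVectorizer(binary=True)`, which records only whether a word occurs; the corpus and variable names are assumptions for illustration.

```python
from sklearn.feature_extraction.text import CountVectorizer, TfidfVectorizer

# Made-up corpus, not taken from the tweet data.
corpus = [
    "good good day",
    "bad day",
]

# 1) Frequency vectors: how many times each word occurs in each document.
freq = CountVectorizer()
freq_matrix = freq.fit_transform(corpus).toarray()

# 2) One-hot (binary) encoding: 1 if the word occurs at all, otherwise 0.
onehot = CountVectorizer(binary=True)
onehot_matrix = onehot.fit_transform(corpus).toarray()

# 3) TF-IDF: counts reweighted so words shared by many documents weigh less.
tfidf = TfidfVectorizer()
tfidf_matrix = tfidf.fit_transform(corpus).toarray()

# Column order follows the learned vocabulary (word -> column index).
columns = sorted(freq.vocabulary_, key=freq.vocabulary_.get)
print(columns)
print(freq_matrix)
print(onehot_matrix)
print(tfidf_matrix.round(2))
```

In the second document, "bad" gets a higher TF-IDF weight than "day" because "day" appears in both documents and is therefore less distinctive.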

Text Vectorization and Transformation Pipelines

from sklearn.feature_extraction.text import TfidfVectorizer

There are a lot of attributes in TfidfVectorizer; here's the example code.
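A minimal sketch of plugging a custom preprocessing function into TfidfVectorizer through its `analyzer` parameter might look like this. The `simple_analyzer` function and the three tweets are made-up stand-ins, not the article's preprocessing function or data.

```python
from sklearn.feature_extraction.text import TfidfVectorizer


def simple_analyzer(tweet):
    """Stand-in for a full preprocessing function: lowercase alphabetic tokens."""
    return [word for word in tweet.lower().split() if word.isalpha()]


# Made-up tweets for illustration only.
tweets = [
    "what a lovely sunny day",
    "this is a terrible day",
    "lovely people and lovely food",
]

# When analyzer is a callable, each raw document is passed to it and the
# returned list of tokens is used as that document's features.
vectorizer = TfidfVectorizer(analyzer=simple_analyzer)
X = vectorizer.fit_transform(tweets)

print(X.shape)                     # (number of tweets, vocabulary size)
print(len(vectorizer.vocabulary_)) # distinct tokens learned from the tweets
print(vectorizer.idf_.round(2))    # one inverse document frequency per token
```

New text must later be converted with `vectorizer.transform(...)`, not `fit_transform(...)`, so that it is mapped onto the same columns.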

We've got vectorized train data now. It's time to apply SMOTE to oversample the positive tweets to the same level as the negative tweets. Recall that we have only 2242 positive tweets compared to 29720 negative tweets.

X_resampled holds the transformed vectors of the train data, and y_resampled holds the same labels 0 or 1, resampled with SMOTE. In the next section we will use machine learning algorithms to train several models and evaluate scores such as accuracy, precision and recall, and check the confusion matrix.

What supervised algorithm should be used and how to evaluate it?

Multinomial naive Bayes is well known for text classification, but it is designed for discrete values such as word counts. However, the official scikit-learn documentation says that fractional counts such as tf-idf may also work in practice. I chose 3 algorithms implemented in scikit-learn: multinomial naive Bayes, the RandomForest classifier and the GradientBoosting classifier, but you may change the algorithms if you want. Here is the example code; it displays the accuracy score, confusion matrix and classification report for each algorithm.
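The comparison loop could be sketched as follows. The tweets are generated from made-up word lists so the example runs on its own, which means the scores say nothing about the real data set; only the pattern of training and evaluating is the point.

```python
import random

from sklearn.ensemble import GradientBoostingClassifier, RandomForestClassifier
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.metrics import accuracy_score, classification_report, confusion_matrix
from sklearn.model_selection import train_test_split
from sklearn.naive_bayes import MultinomialNB

random.seed(0)

# Made-up vocabulary used to generate toy "tweets".
positive_words = ["love", "great", "happy", "thanks", "win"]
negative_words = ["hate", "awful", "sad", "fail", "angry"]
filler_words = ["today", "people", "news", "really", "time"]


def make_tweet(sentiment_words):
    words = random.choices(sentiment_words, k=2) + random.choices(filler_words, k=2)
    random.shuffle(words)
    return " ".join(words)


texts = [make_tweet(positive_words) for _ in range(100)]
texts += [make_tweet(negative_words) for _ in range(100)]
labels = [1] * 100 + [0] * 100

train_texts, test_texts, y_train, y_test = train_test_split(
    texts, labels, test_size=0.25, random_state=42, stratify=labels
)

# Fit the vectorizer on the training texts only, then reuse it for the test texts.
vectorizer = TfidfVectorizer()
X_train = vectorizer.fit_transform(train_texts)
X_test = vectorizer.transform(test_texts)

models = {
    "MultinomialNB": MultinomialNB(),
    "RandomForest": RandomForestClassifier(n_estimators=100, random_state=42),
    "GradientBoosting": GradientBoostingClassifier(random_state=42),
}

results = {}
for name, model in models.items():
    model.fit(X_train, y_train)
    predicted = model.predict(X_test)
    results[name] = accuracy_score(y_test, predicted)
    print(name, "accuracy:", round(results[name], 3))
    print(confusion_matrix(y_test, predicted))
    print(classification_report(y_test, predicted))
```

On imbalanced data, the per-class precision and recall in the classification report are more informative than the single accuracy number.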

The RandomForest classifier looks like the best algorithm for this model. However, the result might flip if we change the algorithms, tune the parameters using GridSearchCV, or apply other preprocessing techniques to the same data. I did not cover other preprocessing techniques or hyperparameter optimization here. The next section is optional and covers how to predict the polarity of a new tweet that is not in the data set.
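For readers who want to try the tuning mentioned above, a GridSearchCV run over a small pipeline might look like this sketch. The twelve tweets, the parameter grid and the choice of F1 as the score are all assumptions for illustration, not settings used in this article.

```python
from sklearn.ensemble import RandomForestClassifier
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.model_selection import GridSearchCV
from sklearn.pipeline import Pipeline

# Made-up tweets: 1 = positive, 0 = negative.
texts = [
    "great happy day", "love this great win", "happy thanks love",
    "great win today", "thanks for the happy news", "love love love",
    "awful sad day", "hate this awful fail", "sad angry hate",
    "awful fail today", "angry about the sad news", "hate hate hate",
]
labels = [1] * 6 + [0] * 6

# Putting the vectorizer inside the pipeline means it is refitted on each
# training fold, so no information leaks from the validation fold.
pipeline = Pipeline([
    ("tfidf", TfidfVectorizer()),
    ("clf", RandomForestClassifier(random_state=42)),
])

# Parameter names are "<step name>__<parameter>".
param_grid = {
    "tfidf__ngram_range": [(1, 1), (1, 2)],
    "clf__n_estimators": [10, 50],
}

search = GridSearchCV(pipeline, param_grid, cv=3, scoring="f1")
search.fit(texts, labels)

print(search.best_params_)
print(round(search.best_score_, 3))
```

After fitting, `search.best_estimator_` is the pipeline refitted on all the data with the best parameter combination.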

(Optional) Test the generated model

It's time to play with real-world data. I picked 2 of Donald Trump's tweets from his timeline. Let's use our model to predict from the vectorized words whether each tweet's polarity is positive or negative.

Here's the tweet for the experiment.
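Predicting the polarity of unseen text could be sketched like this. The training tweets and the two new tweets are made-up placeholders, not real tweets, and multinomial naive Bayes is used here only to keep the example small and deterministic.

```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.naive_bayes import MultinomialNB
from sklearn.pipeline import make_pipeline

# Made-up training tweets: 1 = positive, 0 = negative.
train_texts = [
    "great happy day", "love this great win",
    "happy thanks love", "great win today",
    "awful sad day", "hate this awful fail",
    "sad angry hate", "awful fail today",
]
train_labels = [1, 1, 1, 1, 0, 0, 0, 0]

# The pipeline keeps the fitted vectorizer and classifier together, so new
# text goes through exactly the same transformation as the training text.
model = make_pipeline(TfidfVectorizer(), MultinomialNB())
model.fit(train_texts, train_labels)

# Placeholder tweets that were not part of the training data.
new_tweets = [
    "what a great and happy day",
    "this is an awful sad failure",
]
predictions = model.predict(new_tweets)

for tweet, label in zip(new_tweets, predictions):
    print(label, tweet)
```

Words the vectorizer never saw during training, such as "failure" here, are simply ignored at prediction time.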

Here's the Jupyter notebook with all the samples on GitHub for your reference.

How to build Sentiment Analysis with NLTK and Scikit-learn in Python